At the end of 2024, I had completed all the coursework for Aalto University’s Master’s Programme in Automation and Electrical Engineering, and it was time to choose a topic for my thesis with Prosys OPC. After a few initial discussions, the company’s CEO, Pyry Grönholm, proposed an intriguing idea: what if we could analyze the time series data that flows through many of our software products? Time series analysis was uncharted territory for both the company and me, and quite different from the usual OPC UA-related topics.

A time series is a common data format consisting of measurements recorded at regular intervals and arranged in time order. Time series analysis covers the methods used to extract information from such data. My thesis covers two of the most popular areas in this field: forecasting and anomaly detection. In forecasting, the goal is to predict future values based on past measurements, while in anomaly detection the aim is to find abnormal points or patterns in the data. Time series analysis has advanced considerably in recent years thanks to deep learning, where neural networks trained on large amounts of data are used to solve complex tasks. My thesis focuses on industrial time series data, which often consist of sensor measurements from machines and automation processes. The aim was to study and benchmark modern deep learning-based models on this type of data.

Analyzing the data coming from an industrial automation system has many relevant use cases and benefits. Two of the most impactful, condition monitoring and predictive maintenance, are enabled by anomaly detection: by detecting early signs of equipment wear or unusual behavior, companies can prevent unplanned downtime and keep machines from breaking. Forecasting, on the other hand, helps with process planning and can even predict when something unusual might happen. While the analysis can be done with simple algorithms, modern time series analysis models handle complex data better and require little manual configuration.

The full thesis is available in Aalto’s archive.

Test Setup

The practical part of my thesis centered around testing different time series analysis models on diverse industrial datasets. These datasets consisted of various measurements, such as temperature, pressure or water level, each with thousands of data points. Some of the datasets also had known anomalies. These anomalies can appear as unusually high or low values, sudden spikes, or changes in the overall data pattern.
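To make the three anomaly types concrete, the sketch below builds a hypothetical synthetic signal and injects one anomaly of each kind. The sine shape, positions and magnitudes are arbitrary illustrative choices, not the thesis datasets, and the helper names are made up for this example.

```python
import math
import random

def make_normal_series(n=500, period=50, noise=0.05, seed=0):
    """Hypothetical periodic sensor signal: a sine wave plus Gaussian noise."""
    rng = random.Random(seed)
    return [math.sin(2 * math.pi * t / period) + rng.gauss(0.0, noise) for t in range(n)]

def inject_anomalies(series, period=50):
    """Return a copy of the series with three anomaly types, plus 0/1 labels."""
    if len(series) < 400:
        raise ValueError("series must have at least 400 points")
    x = list(series)
    labels = [0] * len(x)
    # 1) Sudden spike: one point far above the normal range.
    x[100] += 3.0
    labels[100] = 1
    # 2) Unusually high values: the level shifts up for 20 samples.
    for t in range(200, 220):
        x[t] += 1.5
        labels[t] = 1
    # 3) Change in pattern: the oscillation runs at twice the normal frequency.
    for t in range(350, 400):
        x[t] = math.sin(4 * math.pi * t / period)
        labels[t] = 1
    return x, labels

data, labels = inject_anomalies(make_normal_series())
```

Per-point labels like `labels` are the kind of ground truth that an anomaly detection score such as F1 is computed against.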

Using these datasets, I benchmarked a couple dozen of the most popular time series analysis models. For forecasting, I ranked them by the absolute error between the forecasts and the actual values. For anomaly detection, I used the optimal F1 score (the harmonic mean of precision and recall), which reflects the balance between finding as many of the anomalies as possible and keeping false positives to a minimum.
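The two metrics can be sketched in plain Python as follows. This is an illustration under assumptions, not the thesis code: the forecast error is assumed to be the mean absolute error (MAE) averaged over the forecast, and "optimal F1" is assumed to mean the best point-wise F1 found by sweeping the detection threshold over the observed anomaly scores. Event-based variants of F1 also exist and would give different numbers.

```python
def mean_absolute_error(actual, forecast):
    """Average absolute difference between forecast and actual values."""
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)

def rank_models(actual, forecasts):
    """Sort model names from lowest to highest MAE (best first)."""
    return sorted(forecasts, key=lambda name: mean_absolute_error(actual, forecasts[name]))

def f1_score(labels, predictions):
    """Point-wise F1: harmonic mean of precision and recall."""
    tp = sum(1 for y, p in zip(labels, predictions) if y and p)
    fp = sum(1 for y, p in zip(labels, predictions) if not y and p)
    fn = sum(1 for y, p in zip(labels, predictions) if y and not p)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def optimal_f1(labels, scores):
    """Best F1 over all thresholds taken from the observed anomaly scores."""
    best_f1, best_threshold = 0.0, None
    for threshold in sorted(set(scores)):
        predictions = [s >= threshold for s in scores]
        f1 = f1_score(labels, predictions)
        if f1 > best_f1:
            best_f1, best_threshold = f1, threshold
    return best_f1, best_threshold
```

Because the optimal F1 picks the best threshold with hindsight, it measures how well a model's scores separate anomalies from normal data, not how well a threshold chosen in advance would work in production.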

Results

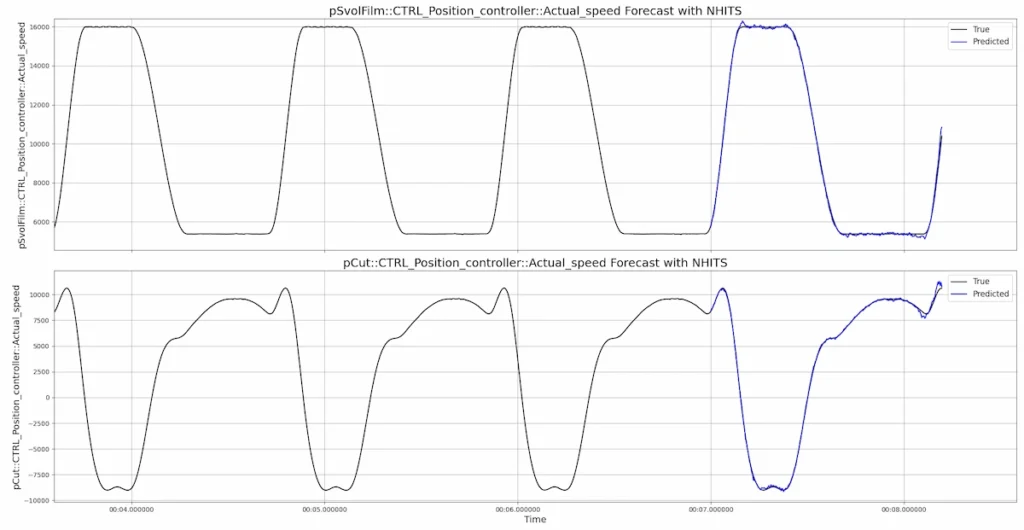

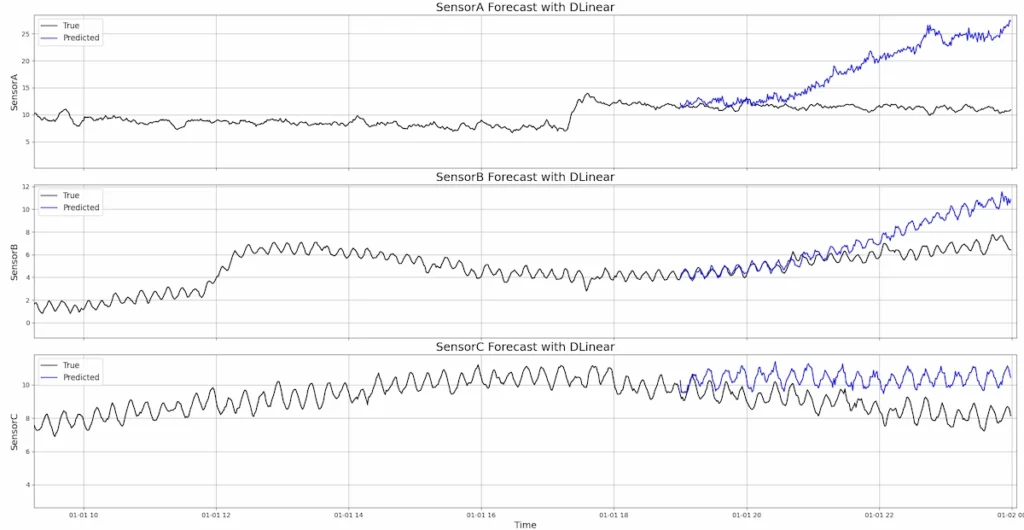

In time series forecasting, the models can roughly be divided into four architecture families: Recurrent Neural Networks (RNN), Convolutional Neural Networks (CNN), Transformers and Multi-Layer Perceptrons (MLP). The major finding of my thesis was that the MLP-based models consistently outperformed the other architectures. This is good news, since these models are also the lightest computationally, which makes them fast enough to run in a reasonable time without a GPU. A model named NHITS was the most accurate in the benchmarks, while NLinear and DLinear offered the best combination of accuracy and computation time.
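Part of why NLinear and DLinear are so light is that each is essentially a single linear layer mapping a lookback window to the forecast horizon, with a simple preprocessing step. The sketch below shows only the forward pass in pure Python. In practice the weights are learned with gradient descent on training windows; here they are simply passed in as lists, and the edge padding and `kernel` value are illustrative assumptions.

```python
def linear(weights, bias, x):
    """One dense layer: maps a lookback window (length L) to a horizon (length H)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def nlinear_forecast(weights, bias, window):
    """NLinear: subtract the last observed value, apply the layer, add the value back."""
    last = window[-1]
    shifted = [v - last for v in window]
    return [y + last for y in linear(weights, bias, shifted)]

def moving_average(x, kernel):
    """Trend estimate: centered moving average, padded by repeating the edge values."""
    if kernel % 2 == 0:
        raise ValueError("kernel must be odd")
    pad = (kernel - 1) // 2
    padded = [x[0]] * pad + list(x) + [x[-1]] * pad
    return [sum(padded[i:i + kernel]) / kernel for i in range(len(x))]

def dlinear_forecast(trend_w, trend_b, season_w, season_b, window, kernel=5):
    """DLinear: split into trend and remainder, forecast each linearly, sum the parts."""
    trend = moving_average(window, kernel)
    remainder = [v - t for v, t in zip(window, trend)]
    trend_part = linear(trend_w, trend_b, trend)
    season_part = linear(season_w, season_b, remainder)
    return [a + b for a, b in zip(trend_part, season_part)]
```

A quick sanity check of the NLinear normalization: with all-zero weights and bias, `nlinear_forecast` falls back to repeating the last observed value, which is the classic naive forecast. The learned weights only have to model deviations from that level.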

The forecasts in the first image above show that the best models can accurately forecast periodic data. The second image shows that the models capture the pattern of the data quite well, but as the forecasting horizon extends, their forecast of the overall trend often becomes less accurate.

In anomaly detection, there are two main approaches: forecasting-based and reconstruction-based. My thesis focuses on the latter, where the model is trained on normal data without anomalies. The model learns to compress normal data and then reconstruct it accurately, but when abnormal data is fed to it, the reconstruction is poor, and the resulting large reconstruction error flags the data as anomalous. MAD-GAN, VAE and LSTM-AD were the most accurate anomaly detection models in the benchmarks. Some of the major challenges in anomaly detection are setting the sensitivity and selecting the window size, which determines how large a chunk of data is analyzed at once.
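The reconstruction idea, including the window size and sensitivity settings, can be sketched without any neural network. This is not MAD-GAN, VAE or LSTM-AD. As an assumption for illustration, the trained model is swapped for a fixed compressor, piecewise aggregate approximation (each window is reduced to a few segment means and then expanded back). Only the threshold is learned from normal data, and the hypothetical `quantile` parameter plays the role of sensitivity: a lower quantile gives a lower threshold and more flagged windows.

```python
import math

def sliding_windows(series, size):
    """All overlapping windows of length `size` (the window-size setting)."""
    return [series[i:i + size] for i in range(len(series) - size + 1)]

def paa_reconstruct(window, segments):
    """Stand-in 'autoencoder': compress to segment means, then expand back."""
    n = len(window)
    out = []
    for s in range(segments):
        start, end = s * n // segments, (s + 1) * n // segments
        mean = sum(window[start:end]) / (end - start)
        out.extend([mean] * (end - start))
    return out

def reconstruction_error(window, segments):
    """Root-mean-square difference between a window and its reconstruction."""
    rec = paa_reconstruct(window, segments)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(window, rec)) / len(window))

def fit_threshold(normal_series, size, segments, quantile=0.99):
    """Set the threshold from errors on anomaly-free data (quantile = sensitivity)."""
    errors = sorted(reconstruction_error(w, segments) for w in sliding_windows(normal_series, size))
    return errors[min(len(errors) - 1, int(quantile * len(errors)))]

def detect(series, size, segments, threshold):
    """Start indices of windows whose reconstruction error exceeds the threshold."""
    return [i for i, w in enumerate(sliding_windows(series, size))
            if reconstruction_error(w, segments) > threshold]
```

With a 20-sample window, a single spike is flagged in all 20 windows that contain it, which illustrates why the window size matters: larger windows give more context but locate an anomaly less precisely.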

The image below shows machine temperature data for which the dataset provider reported three machine failure incidents, marked in green. The red dots are the anomalies detected by the MAD-GAN model. The lower plot shows the anomaly score, where a higher value indicates a higher probability of abnormal behavior. The model detected all three incidents, although its sensitivity was set slightly too high, so it also flagged some smaller spikes.

Conclusions and Future Work

The main conclusion of my thesis was that modern deep learning-based time series analysis models can be a viable option for industrial data, but their actual applicability depends heavily on the nature of the data. Excessive randomness in the data can make it impossible to analyze. Also, intended changes in the automation process can easily be flagged as anomalies by the anomaly detection models, and such changes can also be difficult to forecast. For these reasons, the results produced by such models should never be taken at face value. Instead, they should be treated as decision-support tools that complement domain expertise rather than replace it.

As a continuation of my thesis, I have started developing a prototype plugin for OPC UA Forge that analyzes time series data through Forge’s history API. It has a web UI similar to Forge’s, where the user can easily run the models with the settings of their choosing. The results are plotted so that you can see the predicted data or the detected anomalies. In the future, these results could perhaps be sent back to Forge, for example as OPC UA alarm events. Whether the project continues depends heavily on whether our customers would find this type of functionality useful in their actual industrial environments. If you are interested in such a feature or would like to test the prototype plugin, please contact our sales team.

Artturi Korhonen

Software Engineer